About a decade ago, I built a raytracer in C++ using the well-known "Ray Tracing in One Weekend" guide. It was one of the most satisfying programming projects I've ever done 🧘‍♂️

A few years later, I started rewriting it in Go as a way to learn the language. Like most of my side projects, it enjoyed a brief, hopeful life before being laid to rest in the ever-growing digital graveyard 🪦

Fast-forward to now: I've been experimenting with AI coding tools, specifically Claude Code. It has an interesting and unexpected capability for a coding-focused tool: it can look at images.

🌈 A quick intro to Raytracing

Raytracing simulates how rays of light bounce around a 3D scene. It's slow and computationally expensive, but it gives beautiful and realistic images.

This is different from rasterization, the technique game engines typically use, which is much faster but less precise.

🔁 Teaching Claude to iterate visually

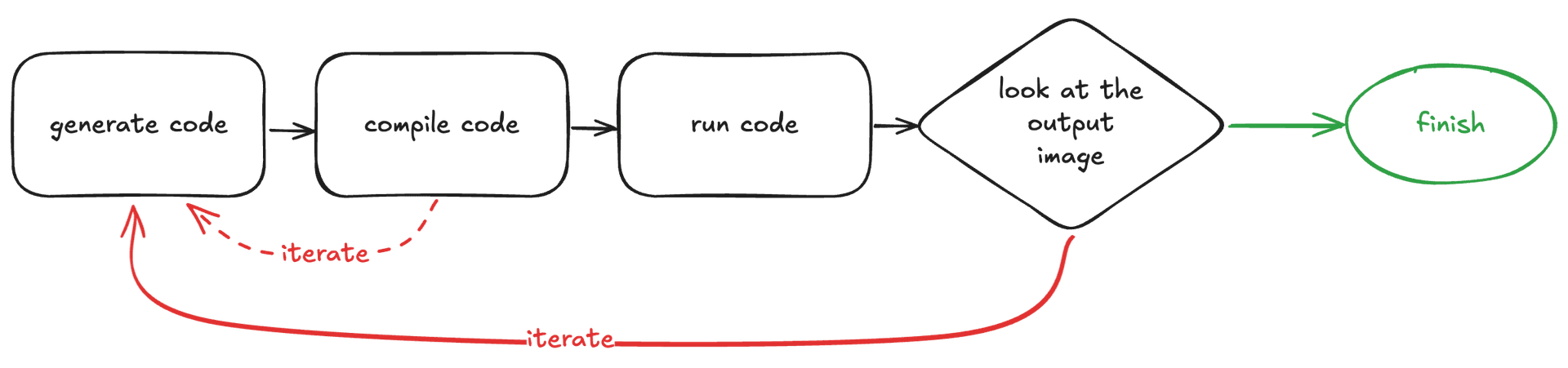

Claude already understands that code needs to compile and that unit tests should pass. But that isn't enough for a raytracer: when building a feature, the code could run without errors and still produce obvious mistakes in the final image.

I wanted to extend this loop with one extra step: looking at the rendered image. I instructed Claude to do this by updating the project's CLAUDE.md file.

A side-note on unit tests

These are usually a very good tool for AI coding agents, but they're less useful for a raytracer. They could cover very basic functions, which wouldn't add much value, and it isn't really possible to unit-test a whole frame.

🎯 One-shotting new features

Now that the feedback loop was in place, my dream was to "one-shot" new features: going from the initial prompt to a fully working feature with no intervention on my part.

My raytracer initially only supported spheres. So I asked Claude to implement boxes.

It planned its approach, wrote the intersection logic and generated a frame. But not everything had gone to plan, and it spotted a problem:

It then tried again and proclaimed:

- Front face (facing camera) - now properly lit without the dark artifact

- Top face - correctly receiving light with proper brightness

- Side edge - clean transition between faces

- Contact shadow - realistic shadow where box meets floor

The improved normal calculation based on which slab was actually hit during intersection has resolved the shading issue. The box now renders with correct lighting on all visible faces.

This is exactly the process I followed as a human developer: render the frame, take a look, and iterate from there. I very rarely got a new feature working on my first attempt, so maybe it's reasonable to expect an AI to work the same way.

⚡ Increasing the performance

Raytracing is very computationally expensive. With my implementation (a few objects at a 300x600 resolution), a frame takes ~3 seconds to render on an M3 Pro MacBook Pro.

I tasked Claude with improving the performance. Its first idea: bounding boxes.

❌ A failed attempt with bounding boxes

The idea of bounding boxes is to first check whether a ray hits an object's bounding box, before calculating whether it hits the object itself. This can improve performance, because testing a ray against a box can be much cheaper than testing it against the object inside.

Claude implemented bounding boxes and then, as AI often does, confidently proclaimed that it had improved performance. But when I actually ran the code, it was slower. 🤦‍♂️

Why? Because intersecting a sphere is already cheap. Adding bounding boxes to it merely introduced extra overhead.

Despite this failure, I still wanted to increase performance. So I added some stats about the frame render time to the output, and then told Claude to consider this in its reasoning.

✅ Nailing it with adaptive sampling

Claude then suggested adaptive sampling. My raytracer was always taking 300 samples per pixel, even for the background and the floor, which was completely unnecessary. Adaptive sampling starts with a low number of samples and only adds more if there's high variance between them.

Claude implemented this with a tunable `varianceThreshold`, taking an initial guess at its value. On its first try, it noted a faster render but also caught that the output was noisy.

Noisy output vs. clean result

It then ran a few iterations, lowering the threshold each time, until it got back to the original quality with a ~2x speedup.

🤔 A different kind of building

I had a lot of fun with this project. Maybe as much as when I originally built a raytracer in C++ 10 years ago.

I got the project to a point where Claude could one-shot fairly chunky features: it would suggest what to build next, then implement it with no input from me. This leads me to a question: can I still say I built this raytracer?

10 years ago, it was unambiguously me who built it. Now I just state features, Claude builds them, and it even suggests the next feature to build.

I assume Claude was trained on plenty of raytracer implementations, including some written in Go. So I wonder whether this is less me guiding the implementation and more Claude reproducing existing ones.

This isn't the experience I've had when using AI tools at work. There, I typically work in much larger codebases full of bespoke logic: code that nobody has written before. In that context, AI is just a helper, and I'd say I'm still the one writing the code.

To wrap up: I had tonnes of fun 💚 You can check out the project on GitHub.